Building an application platform with LKE and Argo CD

TL;DR

This article is intended to show how to set up an LKE cluster, bootstrap it with Argo CD Autopilot and install ExternalDNS and Traefik.

I have been a Linode customer for a while now, and since I am a platform engineer at my day job, I wanted to build myself a platform on LKE (Linode Kubernetes Engine) for several of my home projects and side hustles. Doing what any engineer my age does first, I "googled" it. (On a side note, it looks like Safari is technically switching to DuckDuckGo for its default search engine. We can talk more about that later.) While I found many good articles and videos, I did not find one centered on GitOps, and more specifically on Argo CD. I am a huge fan of both GitOps and Argo CD, so I wanted to start my platform with them in mind.

Having used Argo CD before, I knew about a related project called Argo CD Autopilot. It is intended specifically for bootstrapping a new Kubernetes cluster with Argo CD, and it provides a good, opinionated repository structure. I wanted to give Autopilot a try to see whether it would help me move faster.

Deploying your LKE cluster

Linode has many good docs and guides. They have several on how to set up your LKE cluster and configure your local kubectl config. I will leave that up to them to explain as they will do a much better job than I will.

I used the following article, but they have many others as well: https://www.linode.com/docs/guides/lke-continuous-deployment-part-3

For the rest of this article, I will assume you have a running LKE cluster. The size of the cluster is not important as this should run on their minimum-sized cluster.

Bootstrapping with Argo CD Autopilot

Next, we will want to get our new Kubernetes cluster started right by bootstrapping all of the applications with Argo CD. Argo CD has a project called Autopilot with the specific goal of making GitOps easier.

Here is a link to their homepage: https://argocd-autopilot.readthedocs.io/en/stable/

To get started using Argo CD Autopilot you will need:

- a Git repo (I use GitHub)

- a token for your Git repo

- the argocd-autopilot CLI installed for your OS

- a Kubernetes cluster (in our case, LKE)

- kubectl configured to connect to your Kubernetes cluster

Argo CD Autopilot does a good job of documenting its CLI, and we will be following its getting started guide here: https://argocd-autopilot.readthedocs.io/en/stable/Getting-Started/

First, you will need to export your git token:

```shell
export GIT_TOKEN=ghp_PcZ...IP0
```

Next, you will need to export the Git repo you would like to store your code in:

```shell
export GIT_REPO=https://github.com/owner/name/some/relative/path
```
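Before bootstrapping, it can help to confirm that the prerequisites listed above are actually in place. The following is a minimal sketch of my own and is not part of Autopilot. The function names are hypothetical. It only checks three things: that the commands are on PATH, that GIT_TOKEN and GIT_REPO are non-empty, and which kubectl context is active. It does not validate the token's permissions.

```shell
#!/bin/sh
# Hypothetical pre-flight check to run before "argocd-autopilot repo bootstrap".
# It does not validate the token's scopes or test the cluster connection.

# Succeeds if the named command is on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Succeeds if every named environment variable is set and non-empty.
require_env() {
  for var in "$@"; do
    eval "value=\${$var-}"        # indirect lookup that also works in plain sh
    if [ -z "$value" ]; then
      echo "missing environment variable: $var" >&2
      return 1
    fi
  done
}

preflight() {
  status=0
  for cmd in argocd-autopilot kubectl git; do
    if ! have_cmd "$cmd"; then
      echo "missing command: $cmd" >&2
      status=1
    fi
  done
  require_env GIT_TOKEN GIT_REPO || status=1
  if have_cmd kubectl; then
    # Show which cluster the bootstrap would target.
    echo "kubectl context: $(kubectl config current-context 2>/dev/null || echo '<none>')"
  fi
  return "$status"
}
```

With this in place, "preflight && argocd-autopilot repo bootstrap" only starts the bootstrap when every check passes.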

Then run the bootstrap command to get Argo CD up and running on your new LKE cluster:

```shell
argocd-autopilot repo bootstrap
```

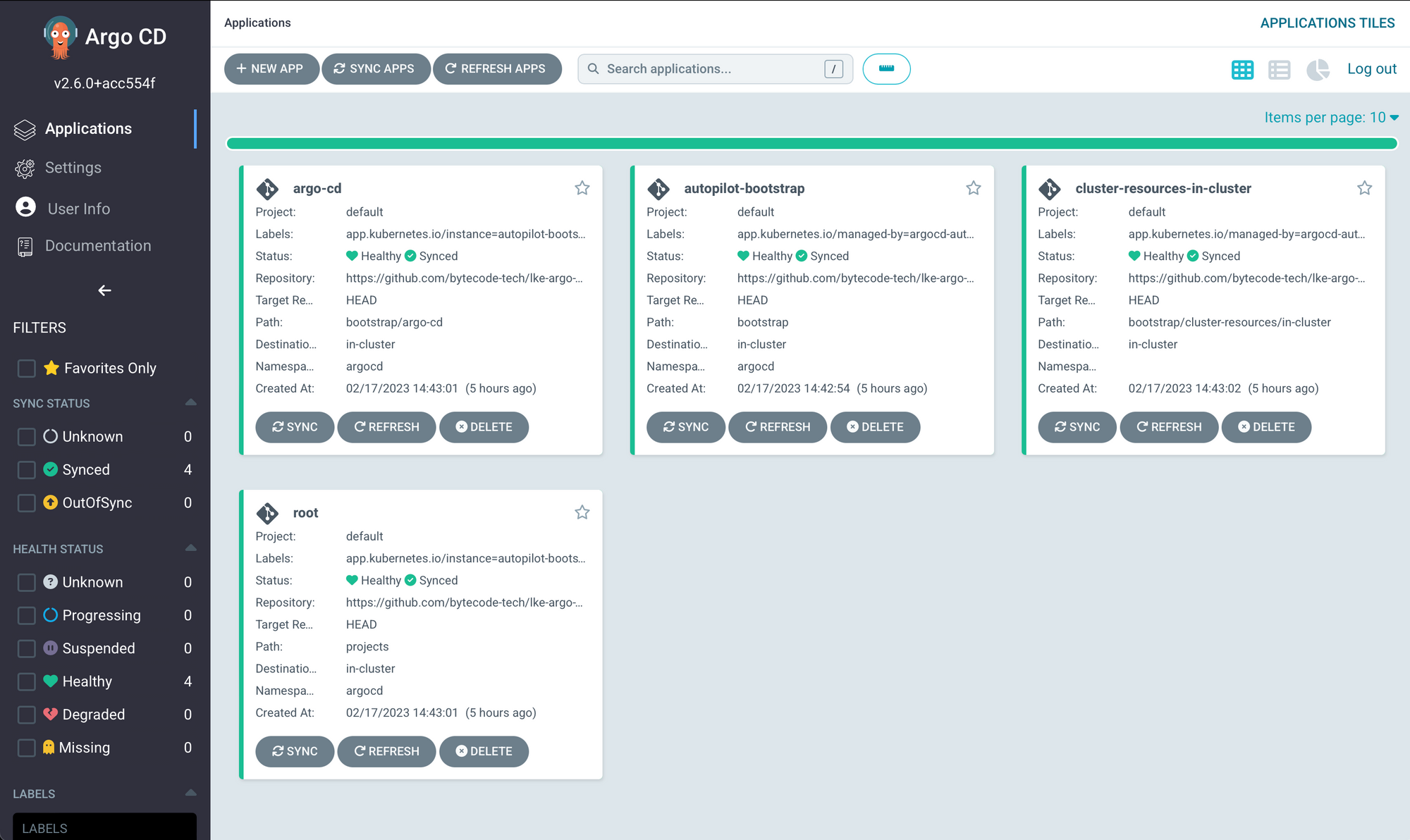

Congratulations!! You should now have Argo CD running on your cluster. It is connected to your Git repository and deploys automatically based on your Git commits. The generated code is pushed to the Git repository specified in the GIT_REPO environment variable. You can connect to the Argo CD UI with a local port-forward like this:

```shell
kubectl port-forward -n argocd svc/argocd-server 8080:80
```

Then browse to http://localhost:8080 and log in with the username "admin" and the password printed by the bootstrap command. This opens the Argo CD UI.

If you missed the password at setup you should be able to use this command to retrieve it:

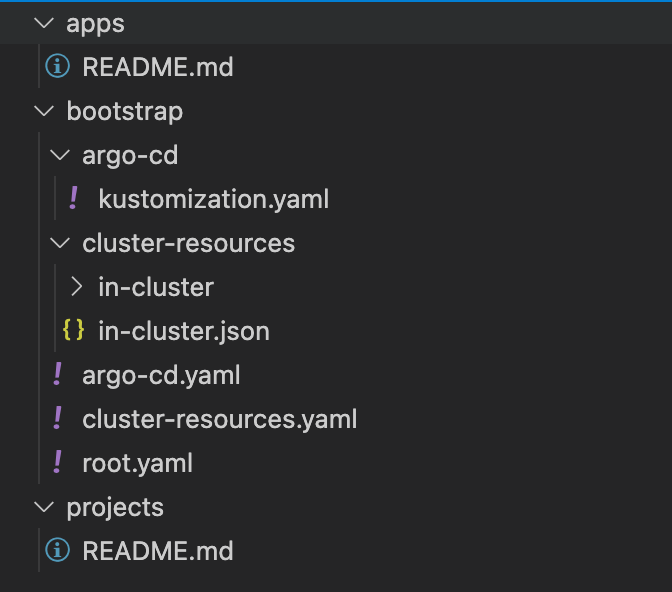

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -dIf you look at the git repo used during the repo export command you should have a directory structure that looks like this:

Argo CD Autopilot gives you a good starting structure for organizing your code. It has the following top-level directories:

- apps - This is where deployed application specifications live.

- bootstrap - This is where all of the applications and manifests that bootstrap the cluster with Argo CD live, including Argo CD itself.

- projects - This is where all the Argo CD projects are defined.
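A GIT_REPO value can include a path inside the repository, like the some/relative/path part of the example. That path affects two things: what you clone, and (if you used a path) most likely the "path" fields of the bootstrap Applications later on. The helper below is a hypothetical sketch that splits the value into the two pieces. It assumes a github.com HTTPS URL with no query string and no ".git" suffix.

```shell
#!/bin/sh
# Hypothetical helper: split an Autopilot-style GIT_REPO value of the form
#   https://github.com/<owner>/<name>[/<path inside the repo>]
# into an SSH clone URL and the (possibly empty) in-repo path.
split_git_repo() {
  rest=${1#https://github.com/}   # owner/name/some/relative/path
  owner=${rest%%/*}               # owner
  rest=${rest#*/}                 # name/some/relative/path
  name=${rest%%/*}                # name
  case $rest in
    */*) subpath=${rest#*/} ;;    # some/relative/path
    *)   subpath= ;;              # no in-repo path was given
  esac
  printf 'clone=git@github.com:%s/%s.git\n' "$owner" "$name"
  printf 'path=%s\n' "$subpath"
}
```

With the example value above, this prints clone=git@github.com:owner/name.git and path=some/relative/path.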

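Clone the repository so you can start adding to it. You clone the repository itself, not the relative path inside it:

```shell
git clone git@github.com:owner/name.git
```

Adding ExternalDNS to the mix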

Argo CD and GitOps give us a great start, but it would be nice if every service started on our platform could be reached by name. Enter ExternalDNS! In its own words, "ExternalDNS makes Kubernetes resources discoverable via public DNS servers." Like KubeDNS, it reads resources from the Kubernetes API, but it publishes the resulting records to an external DNS provider. ExternalDNS integrates with many DNS providers, and we are going to pair it with Linode Domains. Linode Domains is the DNS service Linode provides for managing DNS for your domains, and it's FREE!!!

To get started we need the following:

- A domain set up in Linode Domains

- A Linode API token with read/write access to Domains

The integration between Linode Domains and ExternalDNS is fairly well documented in the Linode ExternalDNS example. I will repost the RBAC manifest here because I made some changes.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns
rules:
- apiGroups: [""]
  resources: ["services","endpoints","pods"]
  verbs: ["get","watch","list"]
- apiGroups: ["extensions","networking.k8s.io"]
  resources: ["ingresses"]
  verbs: ["get","watch","list"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
- kind: ServiceAccount
  name: external-dns
  namespace: external-dns
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
      - name: external-dns
        image: registry.k8s.io/external-dns/external-dns:v0.13.2
        args:
        - --source=ingress
        - --source=service
        - --provider=linode
        - --domain-filter=example.com # (optional) limit to only example.com
        - --txt-prefix=xdns-
        env:
        - name: LINODE_TOKEN
          valueFrom:
            secretKeyRef:
              name: linode-api-token
              key: token
```

There are a few things I would like to note about my configuration compared with the one in the Linode ExternalDNS example. In the "args" section of the spec, I have added two more args. The first is a second source arg, --source=ingress.

With this arg, the ExternalDNS controller watches Ingress definitions as well as Services once we start creating ingresses. I find this helpful because I can place all my annotations related to ingress and DNS in one spot, on the Ingress definition.

The --domain-filter option is already in the example, but I think it is important enough to mention here. If you are running multiple instances of ExternalDNS against the same DNS provider, set this filter so that each instance only manages its own domain. Otherwise the ExternalDNS instances will collide and modify each other's DNS entries. This might happen, for instance, if you are running multiple clusters.
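Here is a simplified sketch of the filter's effect: a hostname passes if it equals the filter domain or is a subdomain of it. The function name is hypothetical, and this is my approximation rather than ExternalDNS's code. The real flag also accepts several domains and has companion flags such as --exclude-domains.

```shell
#!/bin/sh
# Simplified illustration (not ExternalDNS source): is a hostname covered by
# a --domain-filter value? Matching is on whole labels, so "notexample.com"
# is not covered by "example.com".
in_domain_filter() {
  # Normalise: drop a trailing dot and compare case-insensitively.
  host=$(printf '%s' "${1%.}" | tr '[:upper:]' '[:lower:]')
  filter=$(printf '%s' "${2%.}" | tr '[:upper:]' '[:lower:]')
  [ "$host" = "$filter" ] && return 0
  case $host in
    *."$filter") return 0 ;;   # a subdomain of the filter domain
  esac
  return 1
}
```

With the manifest's --domain-filter=example.com, an Ingress for whoami.yourdomain.com would be ignored. For that reason, the hostname you use later has to sit under the filtered domain.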

The second additional arg, --txt-prefix, sets a prefix for the TXT records that ExternalDNS creates. ExternalDNS uses those TXT records to track which DNS records it owns. The prefix prevents collisions when a CNAME record is created in Linode, because a CNAME record and a TXT record CAN NOT have the same name. ExternalDNS uses some logic to decide whether to create a CNAME or an A record, depending on whether it detects a load balancer (this is explained in this article). CNAME and TXT collisions showed up in my log files when I was originally doing the integration, but they have since gone away. I believe they were related to this issue, which should have been fixed in version 0.12.2, but I was still seeing it at the time. I need to investigate more.
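The A-or-CNAME choice mentioned above depends on the target ExternalDNS finds for a hostname. An IP address becomes an A record, and a hostname becomes a CNAME. The sketch below approximates that rule for IPv4 only. The function names are hypothetical, and it is not ExternalDNS's implementation.

```shell
#!/bin/sh
# Rough approximation of the record-type rule:
# IPv4 address -> A, anything else (a hostname) -> CNAME.
# IPv6 handling is deliberately left out of this sketch.

# Succeeds if $1 looks like a dotted-quad IPv4 address.
is_ipv4() {
  case $1 in
    ''|*[!0-9.]*) return 1 ;;     # empty, or contains a non-digit/non-dot
  esac
  old_ifs=$IFS
  IFS=.
  set -- $1                       # split into octets (only digits/dots remain)
  IFS=$old_ifs
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    { [ -n "$octet" ] && [ "$octet" -le 255 ]; } || return 1
  done
  return 0
}

record_type_for() {
  if is_ipv4 "$1"; then echo A; else echo CNAME; fi
}
```

Whenever the CNAME case applies, a TXT ownership record with the same name would be invalid. The xdns- prefix avoids exactly that collision.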

We are going to use Argo CD to deploy the ExternalDNS manifest. To do that, we need to add two things to the bootstrap directory of our Autopilot repository: an Argo CD Application specification and the ExternalDNS manifest we just looked at. The Argo CD Autopilot CLI has many handy features for creating projects and applications. Unfortunately, it has no command for creating bootstrap applications, so we will have to do this manually.
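The CLI can't generate these files. The two bootstrap Applications in this article (ExternalDNS here, Traefik later) differ only in their name, which doubles as the namespace and the bootstrap/<name> path, plus the repo URL. So you may prefer to generate them. The helper below is hypothetical and not an Autopilot command. It prints an Application with the same spec as the hand-written manifests, minus the empty creationTimestamp and status fields, and it assumes no in-repo path prefix.

```shell
#!/bin/sh
# Hypothetical helper (not part of Autopilot): print an Argo CD Application
# that syncs bootstrap/<name> into a namespace called <name>.
# Usage: bootstrap_app <name> <repoURL> > bootstrap/<name>.yaml
bootstrap_app() {
  name=$1
  repo_url=$2
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  labels:
    app.kubernetes.io/managed-by: argocd-autopilot
    app.kubernetes.io/name: ${name}
  name: ${name}
  namespace: argocd
spec:
  destination:
    namespace: ${name}
    server: https://kubernetes.default.svc
  ignoreDifferences:
  - group: argoproj.io
    jsonPointers:
    - /status
    kind: Application
  project: default
  source:
    path: bootstrap/${name}
    repoURL: ${repo_url}
  syncPolicy:
    automated:
      allowEmpty: true
      prune: true
      selfHeal: true
    syncOptions:
    - allowEmpty=true
    - CreateNamespace=true
EOF
}
```

For example, "bootstrap_app external-dns https://github.com/owner/repo.git > bootstrap/external-dns.yaml" writes the ExternalDNS Application.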

Create the following files in the locations specified.

```yaml
# ./bootstrap/external-dns.yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  creationTimestamp: null
  labels:
    app.kubernetes.io/managed-by: argocd-autopilot
    app.kubernetes.io/name: external-dns
  name: external-dns
  namespace: argocd
spec:
  destination:
    namespace: external-dns
    server: https://kubernetes.default.svc
  ignoreDifferences:
  - group: argoproj.io
    jsonPointers:
    - /status
    kind: Application
  project: default
  source:
    path: bootstrap/external-dns
    repoURL: https://github.com/owner/repo.git
  syncPolicy:
    automated:
      allowEmpty: true
      prune: true
      selfHeal: true
    syncOptions:
    - allowEmpty=true
    - CreateNamespace=true
status:
  health: {}
  summary: {}
  sync:
    comparedTo:
      destination: {}
      source:
        repoURL: ""
    status: ""
```

In this file, you will need to change the source repoURL to match your Git repo:

```yaml
repoURL: https://github.com/owner/repo.git
```

Then we need to add the ExternalDNS manifest to a new directory under the bootstrap directory:

```shell
mkdir ./bootstrap/external-dns
```

```yaml
# ./bootstrap/external-dns/external-dns.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns
rules:
- apiGroups: [""]
  resources: ["services","endpoints","pods"]
  verbs: ["get","watch","list"]
- apiGroups: ["extensions","networking.k8s.io"]
  resources: ["ingresses"]
  verbs: ["get","watch","list"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
- kind: ServiceAccount
  name: external-dns
  namespace: external-dns
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
      - name: external-dns
        image: registry.k8s.io/external-dns/external-dns:v0.13.2
        args:
        - --source=ingress
        - --source=service
        - --provider=linode
        - --domain-filter=example.com # (optional) limit to only example.com
        - --txt-prefix=xdns-
        env:
        - name: LINODE_TOKEN
          valueFrom:
            secretKeyRef:
              name: linode-api-token
              key: token
```

You will notice that the external-dns manifest references the Linode API token, which external-dns needs to access your Linode account, like this:

```yaml
env:
- name: LINODE_TOKEN
  valueFrom:
    secretKeyRef:
      name: linode-api-token
      key: token
```

The valueFrom.secretKeyRef field tells Kubernetes to populate this environment variable from the "token" key of the "linode-api-token" Secret. We use this mechanism so that we do not have to commit our secret Linode token to source control.

We will create the Linode secret with kubectl, so make sure kubectl is connected to the appropriate Kubernetes context. We also create the namespace first. Normally Argo CD would create it (the Application sets CreateNamespace=true), but in this case the secret has to exist before Argo CD has synced anything.

```shell
kubectl create namespace external-dns
kubectl create secret generic linode-api-token -n external-dns --from-literal=token='token_goes_here'
```
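kubectl stores the literal in the Secret's data.token field base64-encoded, and Kubernetes hands the decoded value to the container as LINODE_TOKEN. Base64 is an encoding, not encryption, which is one more reason to keep the token out of Git. This offline round trip uses a stand-in value ("abc") instead of a real token. It also shows why printf '%s' is the safer choice if you ever encode a token by hand: echo appends a newline that would end up inside the token.

```shell
#!/bin/sh
# Offline illustration; "abc" stands in for a real Linode token.
token='abc'

# Encoded without a trailing newline, which is the form data.token should hold.
encoded=$(printf '%s' "$token" | base64)

# The same value encoded with echo carries a trailing newline.
encoded_with_newline=$(echo "$token" | base64)

# Decoding, as in the argocd-initial-admin-secret command earlier.
decoded=$(printf '%s' "$encoded" | base64 -d)

printf 'printf:  %s\necho:    %s\ndecoded: %s\n' \
  "$encoded" "$encoded_with_newline" "$decoded"
```

It prints YWJj for the printf version and YWJjCg== for the echo version (the trailing Cg== is the encoded newline). It prints abc after decoding.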

After this secret is created you are ready to commit the source code in your project created by Argo CD. You can issue these commands from the repository you cloned previously.

git add .

git commit -m "adding externalDNS"

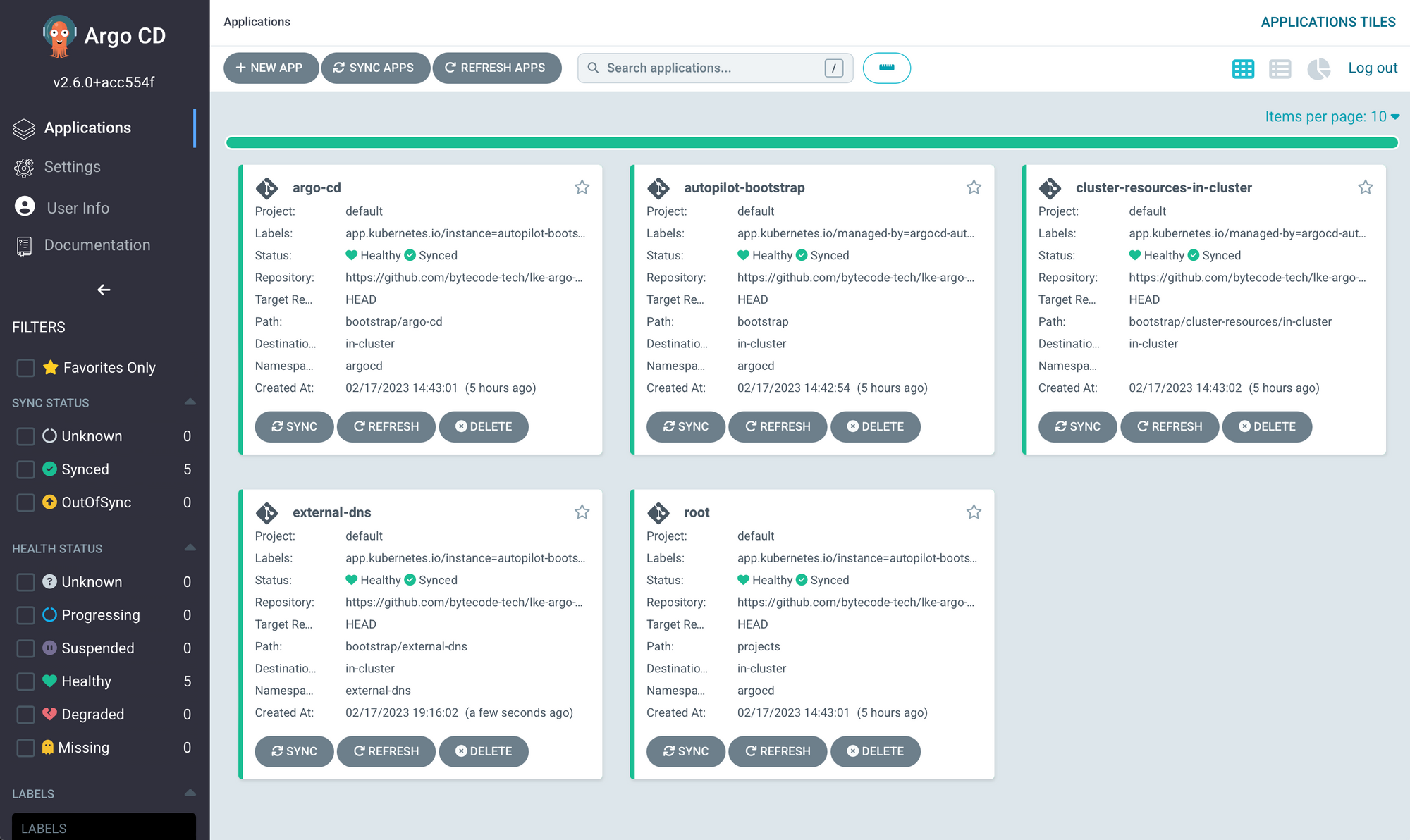

git push originArgo CD will see the commit and perform all of its GitOps duties. Once deployed you should see something like this from localhost:8080 if you are still running the port-forward command to Argo CD's service.

Congratulations!! You now have a working externalDNS.

Adding Ingress

The next thing a well-functioning platform needs is an ingress implementation. There are many Kubernetes ingress implementations out there, but I have come to like Traefik.

Implementing Traefik is fairly simple, and that is one of the main reasons I like using it. Just like with ExternalDNS, we need to add a few files to the Argo CD bootstrap directory. First, add the Application specification for Traefik:

```yaml
# ./bootstrap/traefik.yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  creationTimestamp: null
  labels:
    app.kubernetes.io/managed-by: argocd-autopilot
    app.kubernetes.io/name: traefik
  name: traefik
  namespace: argocd
spec:
  destination:
    namespace: traefik
    server: https://kubernetes.default.svc
  ignoreDifferences:
  - group: argoproj.io
    jsonPointers:
    - /status
    kind: Application
  project: default
  source:
    path: bootstrap/traefik
    repoURL: https://github.com/owner/repo.git
  syncPolicy:
    automated:
      allowEmpty: true
      prune: true
      selfHeal: true
    syncOptions:
    - allowEmpty=true
    - CreateNamespace=true
status:
  health: {}
  summary: {}
  sync:
    comparedTo:
      destination: {}
      source:
        repoURL: ""
    status: ""
```

Again, in this file you will need to change the source repoURL to match your Git repo:

```yaml
repoURL: https://github.com/owner/repo.git
```

Next, create a traefik directory to hold the Traefik manifest:

```shell
mkdir ./bootstrap/traefik
```

Then create the Traefik manifest:

```yaml
# ./bootstrap/traefik/traefik.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-account
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-role
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - secrets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  - networking.k8s.io
  resources:
  - ingresses
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-role-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-role
subjects:
- kind: ServiceAccount
  name: traefik-account
  namespace: traefik
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: traefik-deployment
  labels:
    app: traefik
spec:
  replicas: 1
  selector:
    matchLabels:
      app: traefik
  template:
    metadata:
      labels:
        app: traefik
    spec:
      serviceAccountName: traefik-account
      containers:
      - name: traefik
        image: traefik:v2.9
        args:
        - --api.insecure
        - --api.dashboard=true
        - --providers.kubernetesingress
        - --providers.kubernetesingress.ingressendpoint.publishedservice=traefik/traefik-web-service
        - --entrypoints.web.address=:80
        ports:
        - name: web
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: traefik-web-service
spec:
  type: LoadBalancer
  ports:
  - name: web
    targetPort: web
    port: 80
  selector:
    app: traefik
```

The key things to notice in the traefik.yaml file are the args and the ports. Let's talk about the args first. Here are the ones we want to pay special attention to:

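```yaml
- --providers.kubernetesingress.ingressendpoint.publishedservice=traefik/traefik-web-service
- --entrypoints.web.address=:80
```

The "publishedservice" arg takes the namespace/name of the Service responsible for publishing the ingresses, here traefik/traefik-web-service. Traefik copies that Service's load-balancer address into the status of every Ingress it handles. ExternalDNS then reads that status to decide where each DNS record should point.

The "entrypoints" arg defines an entry point named "web" that accepts traffic from the outside on port 80. This is where the ports come in. The container exposes port 80 under the name "web", and the LoadBalancer Service (a Linode NodeBalancer on LKE) forwards its port 80 to that named port. For now we will only open port 80; we will address SSL in the future.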

Again we will need to commit our code changes to our git repo like this:

git add .

git commit -m "adding Traefik ingress"

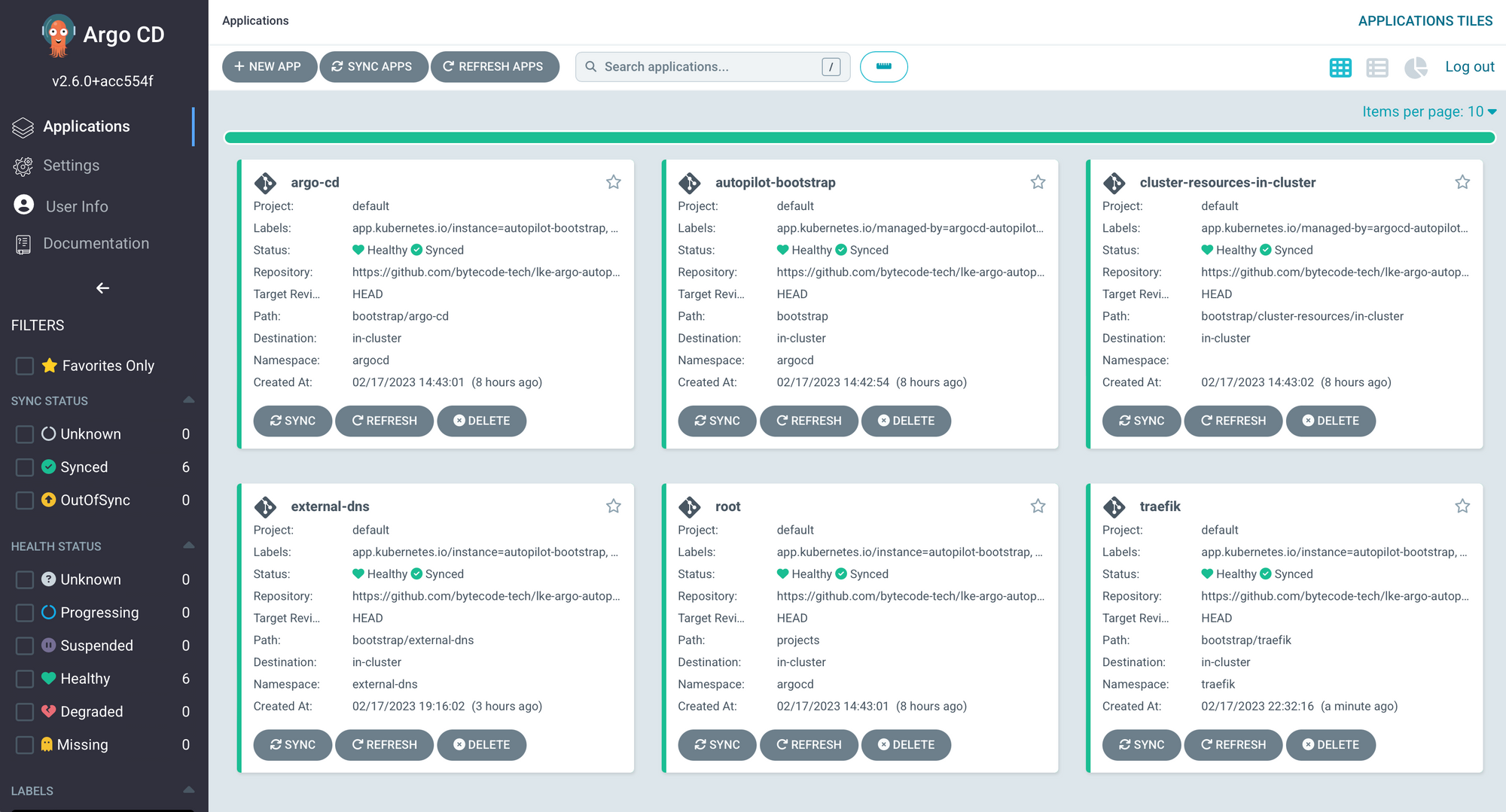

git push originOnce you commit these files and Argo CD refreshes you should see something similar to this:

Let's Deploy an App

To check all of our hard work, let's deploy a simple app using Argo CD. As I said before, Argo CD Autopilot has a good CLI, and we can use it to create our Argo CD project and application.

First, create a project like this:

```shell
argocd-autopilot project create test
```

Next, we need to create the application specification that Argo CD will use to manage the application. Currently, Autopilot only supports creating applications from a Kustomization specification. Some people see this as a limitation. As mentioned in this issue, though, Kustomize has a native way to include Helm charts if that is how your application is packaged.

The Argo CD Autopilot CLI needs a few things to create the application, one of which is the initial Kustomization file you want to use. It can read the Kustomization from a Git repo or from the local file system; we will use the local file system to keep things simple. Create the following files on your local system, somewhere outside of the Argo CD Autopilot repo.

```yaml
# kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- deployment.yaml
```

```yaml
# deployment.yaml
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: whoami
  labels:
    app: whoami
spec:
  replicas: 1
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
      - name: whoami
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: whoami
spec:
  ports:
  - name: http
    port: 80
  selector:
    app: whoami
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: whoami-ingress
  annotations:
    external-dns.alpha.kubernetes.io/hostname: whoami.yourdomain.com
    traefik.ingress.kubernetes.io/router.entrypoints: web
spec:
  rules:
  - host: whoami.yourdomain.com
    http:
      paths:
      - path: /
        pathType: Exact
        backend:
          service:
            name: whoami
            port:
              number: 80
```

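A couple of things to note here. You will need to change whoami.yourdomain.com, which appears in both the external-dns annotation and the rule's host, to a hostname under a domain that you own and have configured in Linode Domains. That domain must also match the --domain-filter you set for ExternalDNS; otherwise ExternalDNS will ignore the Ingress.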

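Next, run the command to create the application in your Argo CD repo:

```shell
argocd-autopilot app create whoami --app ./path/to/kustomization/dir --project test
```

Autopilot commits and pushes the new application to your repo for you. Once Argo CD has synced it and the DNS record has propagated, you should be able to open http://whoami.yourdomain.com in your browser of choice.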
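The NodeBalancer, the new DNS record and any resolver caches can take a few minutes to catch up. Instead of refreshing the browser, you can poll. Below is a minimal sketch of a retry helper. The name and its arguments are my own and do not come from any of the tools above.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds or the attempts run
# out, sleeping between tries.
# Usage: retry <attempts> <seconds-between> <command> [args...]
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while :; do
    "$@" && return 0                   # success: stop retrying
    [ "$n" -ge "$attempts" ] && return 1
    n=$((n + 1))
    sleep "$delay"
  done
}
```

For example, "retry 30 10 curl -fsS http://whoami.yourdomain.com/" keeps trying for roughly five minutes and prints the whoami response once the app answers.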

Closing

Yay!! You did it. You should now have a platform that can:

- Deploy an application using GitOps

- Automatically create DNS records

- Automatically set up Ingress for a service

In my next post, I will show how to add automatic SSL to the mix with cert-manager and Let's Encrypt.